Seeing without eyes: Image processing for robotic bin picking

July 2, 2020

By Teresa Fischer

How image-processing systems allow robots to see and recognize objects

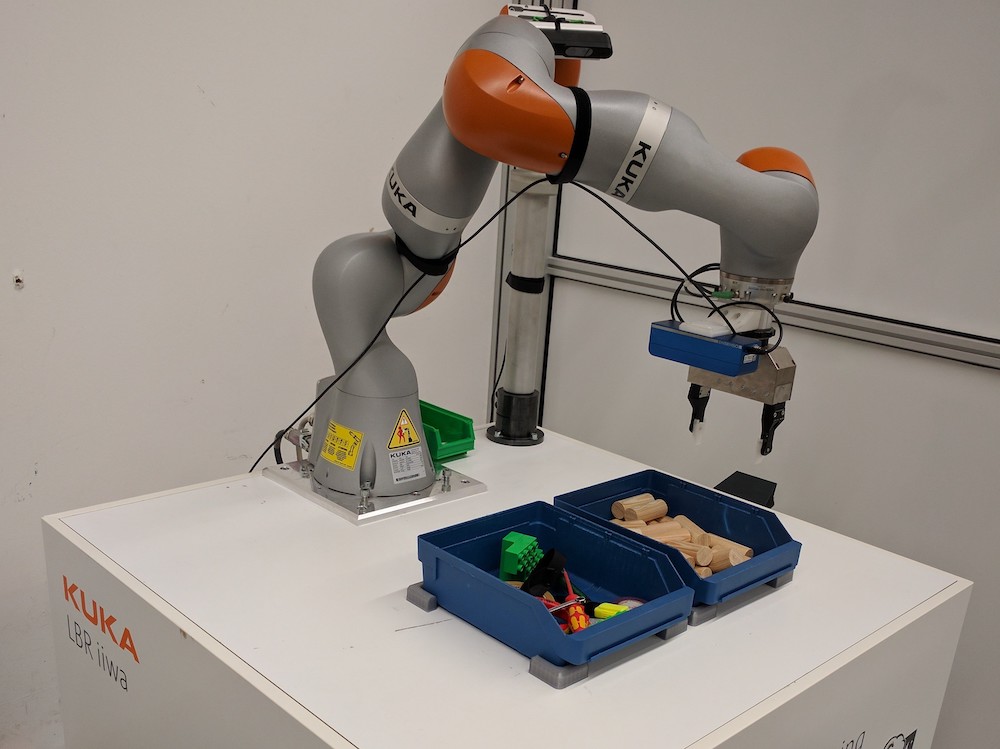

Photo: KUKA AG

The robot moves over a box with colourful building blocks of different shapes, deliberately picks up a yellow triangle and sets it down next to the box. This is a process that could hardly be easier for a human, but one that has posed major challenges for robot programmers since the 1980s.

Bin picking, as it is called, is one of the most difficult tasks in robotics. It is not the actual picking up and setting down that poses problems. The difficulty is in recognizing unsorted objects. This is because the robot lacks one of the most important human abilities: vision.

Seeing without eyes

The German Duden dictionary defines vision as “the act of perceiving (by means of the sensory organ eye).” How is a machine supposed to use this ability when it lacks this sensory organ?

The solution lies in image-processing systems. Image processing works in a very similar way to human vision: neither humans nor machines actually see the object itself, but rather the light reflected off it.

In humans, the iris adjusts the pupil to control how much light enters the eye, the cornea and lens focus it, and the retina converts it into nerve signals that carry colour information. These signals are then forwarded to the brain. In a machine, the same steps are performed by cameras, apertures, cables and processing units.
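On the machine side, the camera delivers nothing more than a grid of pixel values, and the processing unit has to find the object in that grid. The following minimal Python sketch illustrates the idea for the yellow building block from the opening scene. It assumes a tiny hand-made RGB image stored as nested lists and a hypothetical, hand-picked colour threshold for “yellow”; real systems use calibrated cameras and far more robust detection methods.

```python
from typing import Optional

RGB = tuple[int, int, int]
Image = list[list[RGB]]


def is_yellow(pixel: RGB) -> bool:
    """Hypothetical threshold: strong red and green, weak blue."""
    r, g, b = pixel
    return r > 180 and g > 180 and b < 100


def find_object(image: Image) -> Optional[tuple[float, float]]:
    """Return the (row, col) centroid of all yellow pixels, or None if there are none."""
    hits = [(y, x) for y, row in enumerate(image)
            for x, px in enumerate(row) if is_yellow(px)]
    if not hits:
        return None
    mean_row = sum(y for y, _ in hits) / len(hits)
    mean_col = sum(x for _, x in hits) / len(hits)
    return (mean_row, mean_col)


# A 4x4 test image: grey background (the box) with a 2x2 yellow block.
GREY: RGB = (120, 120, 120)
YELLOW: RGB = (230, 210, 40)
image = [[GREY] * 4 for _ in range(4)]
for y, x in [(1, 2), (1, 3), (2, 2), (2, 3)]:
    image[y][x] = YELLOW

print(find_object(image))  # (1.5, 2.5)
```

The centroid is one simple choice of “where the object is”; a gripper would additionally need the object’s orientation and a way to convert pixel positions into robot coordinates.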

Perception is the difference

“Despite the many similarities between human and technological vision, there are major differences between the two worlds,” explains Anne Wendel, director of the machine vision group at the VDMA Robotics + Automation Association.

“The greatest difficulty is understanding and interpreting image data. In the course of their lives, humans learn the meaning of objects and situations that they perceive with their eyes on a daily basis and filter them intuitively for the most part. By contrast, an image-processing system only identifies objects correctly if they have previously been programmed or trained.”

The brain of a small child can distinguish between apples and pears just as quickly as between a cat and a dog. The same task is very difficult for a technological system.
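Wendel’s point that a system only recognizes what it has been programmed or trained for can be illustrated with a very simple classifier. The sketch below is a nearest-centroid classifier, a classic technique chosen here for brevity rather than anything described in the article. The two features (elongation and a hue angle) and all training values are hypothetical numbers invented for illustration, not measured data.

```python
import math

# Hypothetical feature vectors: (elongation = height / width, hue angle in degrees).
# Values are invented for illustration; hue wrap-around at 360 degrees is ignored.
TRAINING = {
    "apple": [(1.0, 10.0), (1.1, 15.0), (0.9, 5.0)],
    "pear": [(1.6, 60.0), (1.5, 70.0), (1.7, 65.0)],
}


def centroids(training):
    """Average the training examples of each class into one prototype vector."""
    protos = {}
    for label, samples in training.items():
        n = len(samples)
        dims = len(samples[0])
        protos[label] = tuple(sum(s[i] for s in samples) / n for i in range(dims))
    return protos


def classify(features, protos, max_distance=20.0):
    """Return the label of the nearest prototype, or None if none is close enough.

    Objects far from every trained class are rejected: the system cannot
    name things it was never trained on.
    """
    label, dist = min(((lbl, math.dist(features, p)) for lbl, p in protos.items()),
                      key=lambda item: item[1])
    return label if dist <= max_distance else None


protos = centroids(TRAINING)
print(classify((1.05, 12.0), protos))  # apple
print(classify((1.55, 66.0), protos))  # pear
print(classify((1.0, 220.0), protos))  # None: an untrained object, e.g. a blue block
```

A child learns such distinctions from a handful of examples and generalizes effortlessly; here, anything outside the trained classes simply falls through as `None`. Practical systems also normalize features so that one dimension (here the hue) does not dominate the distance.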

Deep learning helps with recognition

Software algorithms exist for a wide range of identification tasks. To program them correctly, developers of image-processing systems must know in advance exactly what the system will have to achieve, so that it can be designed accordingly.

“Deep learning – the use of artificial neural networks – allows images to be classified with better success rates than previous methods and can be of help here,” says Wendel.

Good results can be achieved, particularly in standard applications. However, training requires a large quantity of image material, normally far more than the production process provides, especially images of defective parts.

Deriving actions from information

According to KUKA vision expert Sirko Prüfer, the combination of image processing and robotics goes a step further: “We actively involve the robot in the so-called ‘perception-action loop.’ It is not enough for us to capture the information from the image. We concern ourselves with what action can be derived from the information for the robot.”
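The perception-action loop Prüfer describes can be sketched as a simple cycle: perceive the scene, decide on an action, act, and then perceive again, because every pick changes what is in the bin. The Python sketch below simulates this under stated assumptions: a hypothetical `SimulatedBin` stands in for the camera and detection step, the pixel-to-millimetre calibration values are invented, and the “leftmost object first” rule is an arbitrary placeholder for a real grasp-planning strategy.

```python
from dataclasses import dataclass, field

# Hypothetical calibration: 0.5 mm per pixel, image origin at (100 mm, 200 mm) in the robot frame.
MM_PER_PIXEL = 0.5
ORIGIN_MM = (100.0, 200.0)


@dataclass
class SimulatedBin:
    """Stand-in for camera + detection: objects stored as (u, v) pixel positions."""
    objects: list[tuple[int, int]] = field(default_factory=list)

    def capture(self) -> list[tuple[int, int]]:
        # A real system would grab an image and run detection here.
        return list(self.objects)

    def remove(self, pixel: tuple[int, int]) -> None:
        self.objects.remove(pixel)


def pixel_to_robot(pixel: tuple[int, int]) -> tuple[float, float]:
    """Convert an image position to robot coordinates (mm) using the assumed calibration."""
    u, v = pixel
    return (ORIGIN_MM[0] + u * MM_PER_PIXEL, ORIGIN_MM[1] + v * MM_PER_PIXEL)


def perception_action_loop(bin_: SimulatedBin, max_cycles: int = 10) -> list[tuple[float, float]]:
    """Repeat perceive -> decide -> act, re-perceiving after every action."""
    picks = []
    for _ in range(max_cycles):
        detections = bin_.capture()          # perceive
        if not detections:
            break                            # bin is empty
        target = min(detections)             # decide: hypothetical rule, leftmost object first
        picks.append(pixel_to_robot(target)) # act: here we only record the pick position
        bin_.remove(target)                  # the scene changes as a result of the action
    return picks


bin_ = SimulatedBin([(40, 10), (20, 30)])
print(perception_action_loop(bin_))  # [(110.0, 215.0), (120.0, 205.0)]
```

The key design point is that the image is captured again at the start of every cycle instead of planning all picks from a single snapshot, so the robot always acts on the current state of the bin.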

Combined with mobility, this can open up new fields of application: from the robotic harvesting of highly sensitive varieties of fruit and vegetables to healthcare applications that require a robot to recognize an entire room comprehensively.

Another major topic for the future is “embedded vision”, in other words, image processing embedded directly in end devices. Examples include driver-assistance systems in cars and autonomous driving, neither of which is possible without integrated vision systems.

Embedded vision is making inroads into fields of application that could not previously be tapped using smart cameras or PC-based systems. Value creation is shifting further from hardware to software.

A question of data protection

Whether bin picking, automated harvesting or embedded vision, all these applications demand substantial computing capacity for image processing. Edge and cloud computing concepts will therefore play a pivotal role in the future.

This raises data protection and data security issues, which image-processing expert Wendel sees as a challenge: “As in many other areas of production, there is a fundamental question: Who owns the network? And the data? And the condensed reproduction of the data?”

This area still needs to be clarified, especially as the vision market grows in Germany and beyond (see figure above). These challenges show just how superior human vision and judgment still are to their technological counterparts. Even though bin-picking solutions are constantly improving, there is no substitute for the human eye.

Teresa Fischer is deputy group spokesperson for KUKA AG.

This article, which was reprinted with the permission of KUKA AG, appeared in the April 2020 issue of Robotics Insider, Manufacturing AUTOMATION’s quarterly e-book dedicated to industrial robotics.
